What Is Google Gemini?

Google Gemini is a large language model (LLM) developed by Google, designed to handle intricate tasks that require human-like cognitive abilities. Google Gemini uses a unique multi-modal architecture, and is the first LLM trained on textual, image, and video data combined. The goal is to make the model better able to address multi-modal queries and interactions.

Developed with an emphasis on adaptability and scalability, Gemini’s architecture allows for its deployment in various environments, ranging from cloud-based systems to edge devices. The Gemini series of models includes Gemini Ultra and Pro, intended for use in standard computing environments, Gemini Nano, built for resource-constrained environments, and Gemma, a series of open source LLMs created by Google.

What Can You Use Google Gemini For?

Google Gemini’s multi-modal capabilities support various applications. It can assist in content creation by generating text, images, or videos based on user input, making it useful for marketing, entertainment, and educational content. For example, users can input text descriptions and receive text together with suitable images.

In the field of customer service, Gemini can enhance interactions by providing more accurate and contextually relevant responses. Its ability to process and understand both text and visual data allows it to handle complex queries that involve multiple types of information (for example, images or screenshots of malfunctions sent by users). This can improve the quality of automated customer support systems without human intervention.

Google Gemini is also useful for research and data analysis. Its reasoning abilities enable it to sift through large volumes of text, image, and video data to extract insights. Researchers can leverage Gemini to automate the analysis of scientific papers, legal documents, and other extensive datasets.

Gemini Technical Capabilities

Key features of Google Gemini include:

- Multi-modal architecture: Includes a mix of Transformer and Mixture of Experts (MoE) models. Unlike traditional Transformers that operate as a singular large neural network, MoE models consist of numerous smaller "expert" neural networks. These expert networks are uniquely activated based on the specific type of input they receive, allowing the system to operate more efficiently by utilizing only the relevant pathways.

- Complex reasoning: Provides the ability to process and analyze extensive and varied data types. The system can handle tasks such as analyzing lengthy documents, images and videos, identifying intricate details and reasoning about the content comprehensively. It can manage multimodal inputs effectively, such as understanding and reasoning about silent films and identifying key plot points.

- Enhanced performance: Performs well across a range of benchmarks, including text, code, image, audio, and video evaluations. Its enhanced context window, capable of processing up to a million tokens, allows it to perform well in tasks that require detailed analysis within vast blocks of text or video content. Its in-context learning capabilities enable it to acquire new skills from a long prompt without the need for further fine-tuning.

- Extensive ethics and safety testing: It is built and maintained using tests designed to align with ethical AI principles and safety policies. The development team conducts thorough evaluations to identify and mitigate potential harms, employing techniques such as red-teaming to discover vulnerabilities. The software undergoes continuous updates and improvements in safety protocols.

Google Gemini vs. GPT 4

The table below provides a comparison between Google Gemini Ultra and GPT-4 across several key capabilities and benchmarks: general knowledge representation (MMLU), multi-step reasoning (Big-Bench Hard), reading comprehension (DROP), commonsense reasoning (HellaSwag), arithmetic manipulations (GSM8K), challenging math problems (MATH), and Python code generation (HumanEval and Natural2Code).

Notably, Gemini Ultra outperforms GPT-4 in several areas, particularly in arithmetic manipulations and Python code generation, while GPT-4 excels in commonsense reasoning tasks as indicated by the HellaSwag benchmark.

| Capability | Benchmark | Description | Gemini Ultra | GPT-4 |

|---|---|---|---|---|

| General | MMLU | Representation of questions in 57 subjects (incl. STEM, humanities, and others) | 90.0% (CoT@32*) | 86.4% (5-shot**) |

| Reasoning | Big-Bench Hard | Diverse set of challenging tasks requiring multi-step reasoning | 83.6% (3-shot) | 83.1% (3-shot API) |

| DROP | Reading comprehension (F1 Score) | 82.4 (Variable shots) | 80.9 (3-shot reported) | |

| HellaSwag | Commonsense reasoning for everyday tasks | 87.8% (10-shot*) | 95.3% (10-shot* reported) | |

| Math | GSM8K | Basic arithmetic manipulations (incl. Grade School math problems) | 94.4% (maj@32) | 92.0% (5-shot CoT reported) |

| MATH | Challenging math problems (incl. algebra, geometry, pre-calculus, and others) | 53.2% (4-shot) | 52.9% (4-shot API) | |

| Code | HumanEval | Python code generation | 74.4% (0-shot IT*) | 67.0% (0-shot* reported) |

| Natural2Code | Python code generation. New held out dataset HumanEval-like, not leaked on the web | 74.9% (0-shot) | 73.9% (0-shot API) |

Source: Google

Note: In May 2024, OpenAI released the GPT-4o model, which is fully multimodal, like Gemini. According to OpenAI, GPT-4o surpasses Gemini on most relevant benchmarks, except for its smaller context window, which is 128,000 tokens.

Learn more in our detailed guide to Google Gemini vs ChatGPT (coming soon)

Understanding Gemini Models

Here’s an overview of the Gemini versions available.

Gemini 1.5 Pro

Gemini 1.5 Pro is a mid-size multimodal LLM model. It is optimized for a wide range of reasoning tasks including code generation, text generation and editing, problem-solving, recommendation generation, information and data extraction, and AI agent creation.

This model can process extensive data inputs, such as up to one hour of video, 9.5 hours of audio, and large codebases with over 30,000 lines of code or 700,000 words. It supports zero-, one-, and few-shot learning tasks, allowing for flexible adaptation to various use cases.

Key properties include:

- Inputs: Audio, images, and text

- Output: Text

- Input token limit: 1,048,576

- Output token limit: 8,192

- Safety settings: Adjustable by developers

Gemini 1.5 Flash

Gemini 1.5 Flash is designed for fast and versatile multimodal tasks. It shares many capabilities with Gemini 1.5 Pro but is optimized for quicker performance across diverse applications.

Key properties include:

- Inputs: Audio, images, and text

- Output: Text

- Input token limit: 1,048,576

- Output token limit: 8,192

- Safety settings: Adjustable by developers

Gemini 1.0 Pro

Gemini 1.0 Pro is focused on NLP tasks, such as multi-turn text and code chat, and code generation. It supports zero-, one-, and few-shot learning, making it adaptable for various text-based applications.

Key properties include:

- Input: Text

- Output: Text

- Supported generation methods: Python (generate_content), REST (generateContent)

- Input token limit: 12,288

- Output token limit: 4,096

Gemini 1.0 Pro Vision

Gemini 1.0 Pro Vision is optimized for visual-related tasks, including generating image descriptions, identifying objects in images, and providing information about places or objects. It supports zero-, one-, and few-shot tasks.

Key properties include:

- Inputs: Text and images

- Output: Text

- Input token limit: 12,288

- Output token limit: 4,096

- Maximum image size: No limit

- Maximum number of images per prompt: 16

Gemma Open Models

The Gemma family of models includes lightweight LLM models derived from the Gemini technology. They can be customized using tuning techniques to excel at specific tasks. Gemma models are available in pretrained and instruction-tuned versions, allowing for tailored deployment based on specific needs.

Gemma models are not open source, but Google provides the source code and allows its free use under certain restrictions.

You can download Gemma models on Kaggle.

Key properties include:

- Model sizes: 2B and 7B parameters

- Platforms: Mobile devices, laptops, desktop computers, and small servers

- Capabilities: Text generation and task-specific tuning

Learn more in our detailed guide to Google Gemini Pro (coming soon)

Google Gemini Consumer Interfaces

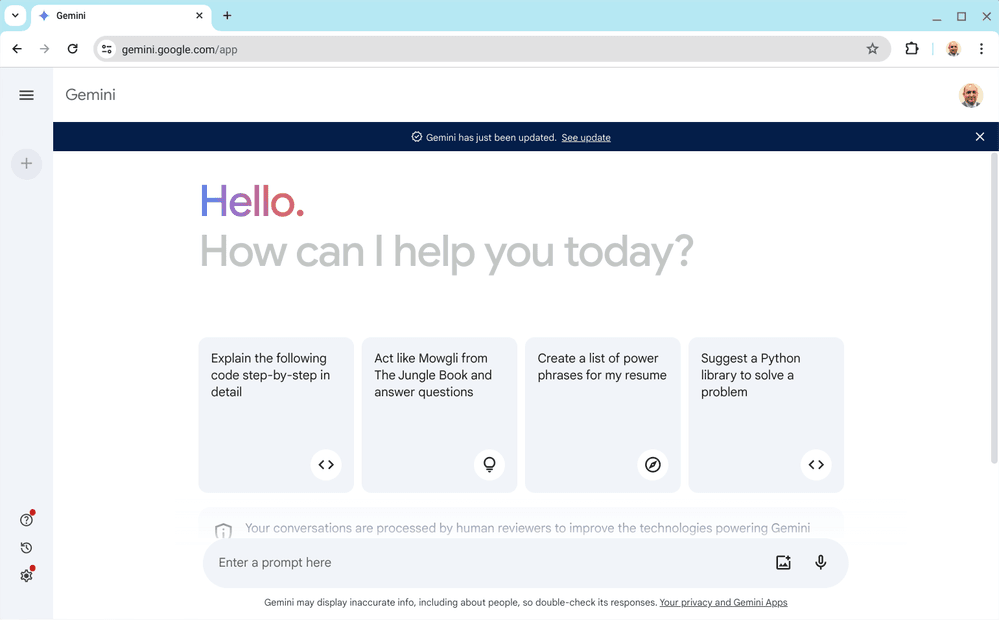

Web Interface

Google Gemini’s web interface provides users with an intuitive platform for interacting with the LLM. The interface is designed to support various input types, including text, image, and video, making it easy for users to perform complex tasks such as document analysis, image recognition, and video content summarization.

The web interface includes features like conversation history, real-time collaboration and integration with other Google services.

Direct link: https://gemini.google.com/

Source: Google

Mobile Application

The Google Gemini mobile application brings its LLM to smartphones and tablets. The app supports voice input, making it convenient for hands-free operation and dictation tasks. With features like offline mode and push notifications, it can be used even without a constant internet connection. The mobile app also syncs with the web interface, ensuring a consistent user experience across devices.

Direct link: https://gemini.google.com/app/download

Source: Google Play

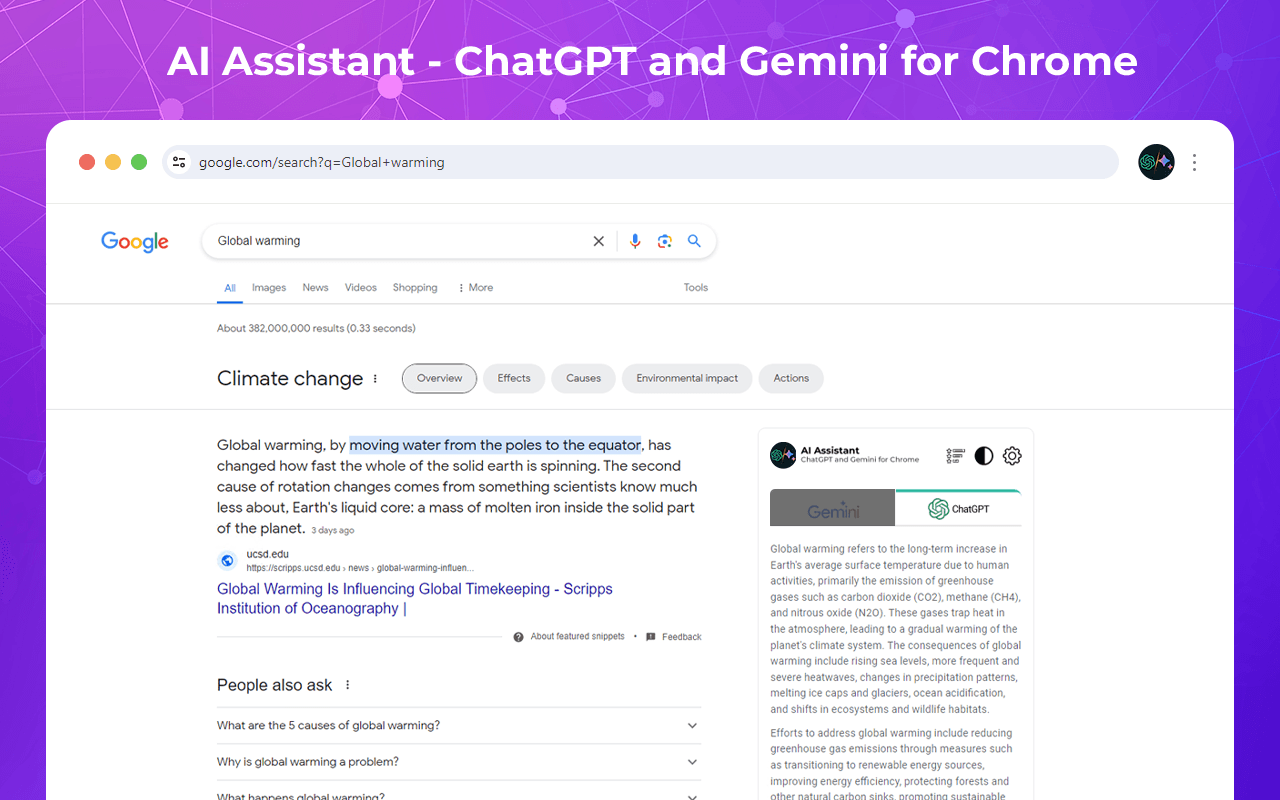

Integration with Chrome

Google Gemini integrates seamlessly with the Chrome browser, allowing users to leverage its capabilities directly from their web browsing environment. This enables tasks such as summarizing web articles, translating text, and generating content based on web page context.

In supported regions, new versions of the Chrome browser provide access to Gemini simply by typing @gemini in the address bar, followed by a specific prompt.

Direct link: https://chromewebstore.google.com/detail/gemini-for-google/iigenimmlpiicejkjbfpbanimcbmlfog?hl=en

Source: Chrome Web Store

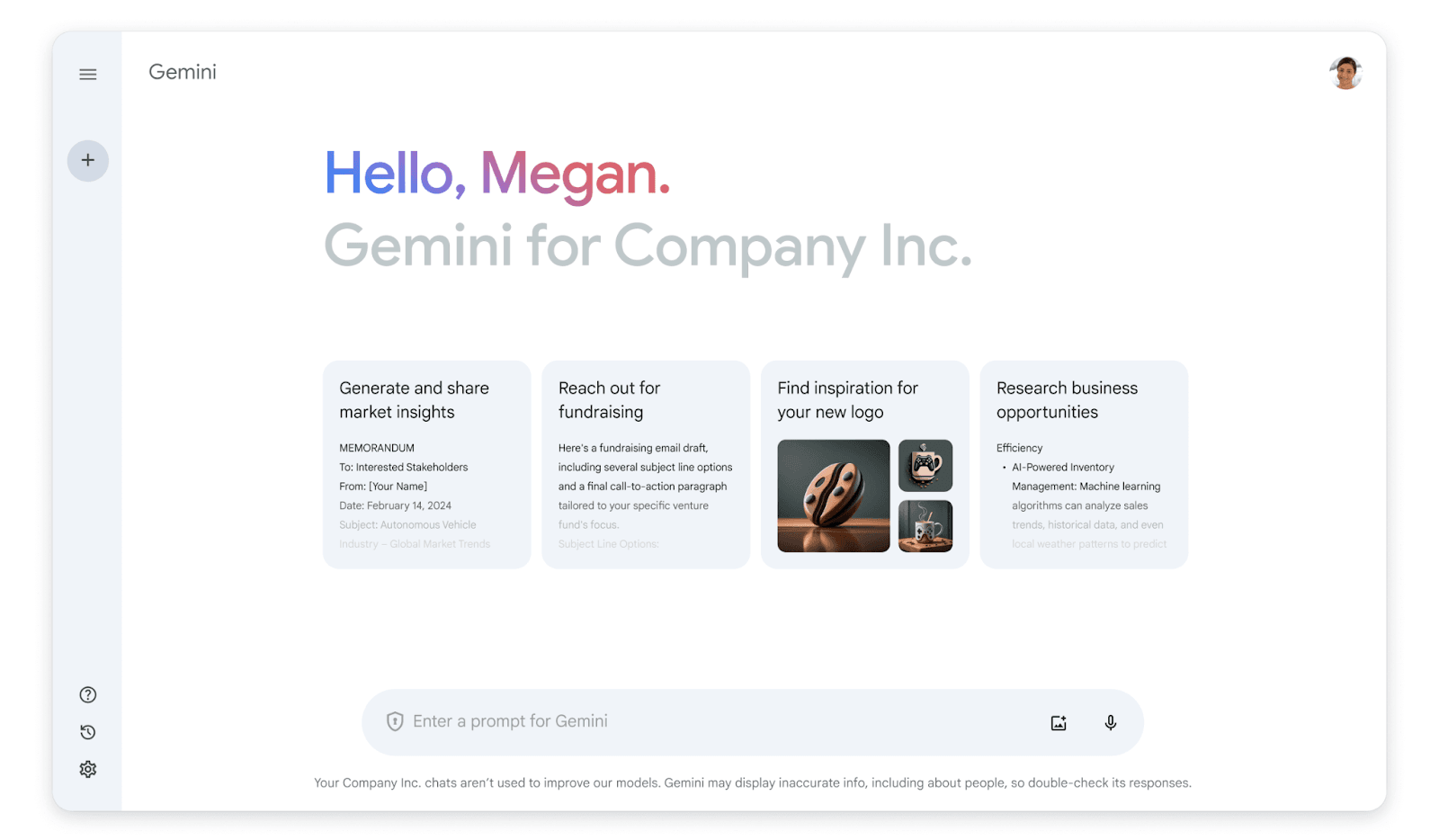

Google Workspace

Within Google Workspace, Google Gemini enhances productivity tools like Google Docs, Sheets, and Slides by offering advanced features such as automated content generation, data analysis, and visual content creation. For example, in Google Docs, users can generate entire paragraphs or reports based on brief prompts. In Google Sheets, Gemini can analyze complex datasets and provide insights or visualizations.

Direct link: https://workspace.google.com/solutions/ai/

Source: Google Blog

Google Gemini Developer Interfaces

Vertex AI

Google Gemini is integrated with Vertex AI, Google’s unified machine learning platform, enabling developers to build, deploy, and scale ML models more efficiently. Through Vertex AI, developers can access pre-trained Gemini models and customize them for specific use cases using Google’s suite of ML tools. This integration supports features like automated hyperparameter tuning, end-to-end pipeline orchestration, and model monitoring.

Learn more: Using Gemini 1.5 Pro and Flash in Vertex AI

Gemini API

The Gemini API provides developers with API endpoints to integrate Google Gemini into their applications. The API supports various tasks such as text generation, image analysis, and video processing. Developers can integrate the API with their software through SDKs available for multiple programming languages, including Python, JavaScript, and Swift.

Learn more: Gemini API overview

Gemini Consumer Pricing {#gemini-consumer-pricing}

Gemini is available as a standard or advanced version.

Gemini

The standard version of Gemini is free, providing access to the 1.0 Pro model. It aids in writing, learning, and planning and is integrated with Google applications.

Gemini Advanced

This version costs $19.99 per month, providing priority access to Gemini’s new features. It uses 1.5 Pro, with a context window of 1 million tokens, allowing users to upload PDFs, documents, and spreadsheets to obtain answers and summaries. It offers 2 TB of Google One storage and can be integrated into Gmail and Google Docs. Users can run and edit Python code using Gemini.

Gemini API Pricing

Gemini offers two pricing models: free and pay-as-you-go.

Gemini 1.0 Pricing

Free of Charge Model

This model is designed to provide users with a basic level of access to the Gemini 1.0 functionalities without any associated costs. Under this plan, users are allowed up to 15 requests per minute and a total of 32,000 tokens per minute, with a daily cap of 1,500 requests.

Pay-as-You-Go Model

For users who require more intensive usage, the "Pay-as-you-go" model offers a higher throughput and token limit to accommodate large-scale operations. This model charges $0.50 per 1 million tokens for input and $1.50 per 1 million tokens for output. It allows up to 360 requests per minute, 120,000 tokens per minute, and 30,000 requests per day.

Gemini 1.5 Pricing

Free of Charge Model

The free model for Gemini 1.5 provides users with access to its multimodal functionalities without any cost. Under this model, users can make up to 2 requests per minute and are allotted 32,000 tokens per minute, with a daily limit of 50 requests.

Pay-as-You-Go Model

This model caters to enterprises and users with more demanding requirements. This pricing model is based on the volume of data processed, charging $7 per 1 million tokens for input and $21 per 1 million tokens for output. It supports up to 10 requests per minute, 10,000 tokens per minute, and 2,000 requests per day.

Learn more in our detailed guide to Google Gemini pricing (coming soon)

Quick Tutorial: Getting Started with Google Gemini API

This tutorial shows how to use the Gemini API with Python. Additional SDKs are available for

Go, Node.js, Web, Dart (Flutter), Swift, and Android.

Prerequisites

To start using the Google Gemini API locally, ensure that your development environment is equipped with Python version 3.9 or later. Additionally, you should have Jupyter installed to run the notebook for interactive code execution and experimentation.

Set Up an API Key

An API key is essential to utilize the Google Gemini API. If you don’t have one yet, you can create a key through Google AI Studio.

Once you have your API key, it’s critical to manage it securely. Do not store your API key in your version control system. Instead, access your API key as an environment variable for enhanced security. Set this up by assigning your API key to an environment variable with the command: export API_KEY=<YOUR_API_KEY>.

Install the Gemini SDK

The SDK required to interact with the Gemini API is available as the google-generativeai package. You can install this package using pip with the command:

pip install -q -U google-generativeai

This will ensure that the SDK is downloaded and updated quietly, without flooding your terminal with logs.

Initialize Your Generative Model

The generative model can be initialized by importing the necessary module and configuring it with your API key. Start by importing the Google generative AI module in your Python script:

import google.generativeai as genai.

Then, configure your session to authenticate using your API key:

genai.configure(api_key=os.environ["API_KEY"]).

Lastly, initialize the specific model you intend to use, for example:

model = genai.GenerativeModel('gemini-pro').

Start Generating Text

With the model initialized, you can now generate text using the Gemini API. Here’s an example of how to use the model to generate content.

Call the generate_content method with a prompt, like writing a story about a surprise birthday party. For example:

response = model.generate_content("Write a story about a surprise birthday party.")

After generating the content, you can print out the response using:

print(response.text)

This will display the generated text in your terminal or Jupyter notebook.

Building Gemini Applications with Acorn

To download GPTScript visit https://gptscript.ai. As we expand on the capabilities with GPTScript, we are also expanding our list of tools. With these tools, you can build any application imaginable: check out tools.gptscript.ai and start building today.