In this post, we are going to explore the process of creating an interactive storybook that can take a prompt or a full story and transform it into an illustrated children’s book. We are going to achieve this using Nuxt 3 and GPTScript. The journey will be divided into two main parts:

- Crafting a GPTScript

- Integrating GPTScript into a web application

In part 2 of this post, we’ll be turning this code into a complete web application that can be hosted and used to view stories. The complete product will look like https://story-book-main.onrender.com/ and you can see the final source code here.

We have to start somewhere, so let’s get the basics done!

Getting Started

GPTScript

GPTScript is a natural-language scripting language that lets you prompt Large Language Models (LLMs) using tools that you have defined or imported.

Setup

Setting up GPTScript is quite simple. You can find a detailed guide here that will walk you through the steps. To give you a brief overview, all you need to do is install the CLI, set up any necessary API keys, and you’re good to go.

Introduction to Nuxt 3

Nuxt 3 is a comprehensive application framework that leverages the power of Vue to construct applications.

Setup

You can learn more about Nuxt 3 here. However, for the purpose of this tutorial, all you need to ensure is that you have Node installed, with a minimum version of v20.11.1.

Building the Story Book

Setting up the repo

Let’s start by setting up a Nuxt application. There are many options available during this process, and for this tutorial, most of them will work. Choose what fits you best, but we’ll be using npm for packaging. If you prefer to follow these commands closely, select npm.

npx nuxi@latest init

After generating our new repository, the next step is to install two modules: gptscript and @nuxt/ui.

The gptscript module allows us to easily execute GPTScripts, while the @nuxt/ui module provides user-friendly front-end components.

npm install @gptscript-ai/gptscript @nuxt/ui

Now, we need to register our Nuxt modules in nuxt.config.ts.

Your file should look like the one below:

// <https://nuxt.com/docs/api/configuration/nuxt-config>

export default defineNuxtConfig({

devtools: { enabled: true },

modules: [

'@nuxt/ui',

'@nuxtjs/tailwindcss',

]

})

With our essential dependencies installed, we can now create some important files. It’s worth noting that larger LLM workloads, including GPTScript, can take some time to complete. Therefore, as we design our API, it’s essential to give the user as much feedback as possible about the process status. We’ll achieve this using SSE (Server-Sent Events) and the gptscript node module. With this in mind, let’s create our API routes:

mkdir -p server/api/story

mkdir pages

mkdir components

touch server/api/story/index.post.ts

touch server/api/story/sse.get.ts

touch pages/index.vue

In Nuxt, routing is file-based. In this case, we’ve created two API routes and a page to be served:

- server/api/story/index.post.ts: POST – http://localhost:3000/api/story

- server/api/story/sse.get.ts: GET – http://localhost:3000/api/story/sse

- pages/index.vue: GET – http://localhost:3000

The POST request allows us to create a story, while the GET request enables us to receive status updates. Essentially, we’ll make one request to execute a GPTScript and another to monitor it. Finally, our page will allow us to write some Vue to display the execution state.
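To make the naming convention concrete, here is a minimal sketch of how a server file path relates to an HTTP method and route. The `routeFromServerFile` helper is hypothetical and is not part of Nuxt; it only mirrors the convention for the files we just created and ignores features such as dynamic route parameters.

```typescript
// Hypothetical helper: NOT part of Nuxt. It only illustrates the naming
// convention used for the files created above.
type Route = { method: string; path: string };

function routeFromServerFile(file: string): Route {
  // Drop the leading "server" directory and the ".ts" extension.
  const trimmed = file.replace(/^server/, '').replace(/\.ts$/, '');
  const segments = trimmed.split('/');
  const last = segments.pop() ?? '';

  // A suffix such as ".post" or ".get" restricts the route to one HTTP method.
  const [name, method] = last.split('.');

  // "index" maps to the directory itself rather than to an extra path segment.
  if (name !== 'index') segments.push(name);

  return {
    method: (method ?? 'any').toUpperCase(),
    path: segments.join('/') || '/',
  };
}

const postRoute = routeFromServerFile('server/api/story/index.post.ts');
const sseRoute = routeFromServerFile('server/api/story/sse.get.ts');
console.log(postRoute.method, postRoute.path);
console.log(sseRoute.method, sseRoute.path);
```

Reading the file names this way makes it easier to predict which URL a new file under server/api will answer on.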

Implementing the POST route

Acorn Labs has provided a handy Node module that handles the majority of the work in running our script. Although we’ll write our GPTScript in a different section, let’s assume for now that the file will be named story-book.gpt

Now, let’s get ready to delve into the code!

import gptscript from '@gptscript-ai/gptscript'

import { Readable } from 'stream'

// We need to define the request body that we'll receive from the user.

// In this case, we want a prompt and the number of pages.

type Request = {

prompt: string;

pages: number;

}

// Here's a type to store executions of running GPTScripts.

export type RunningScript = {

stdout: Readable;

stderr: Readable;

promise: Promise<void>;

}

// We're not using a database in this tutorial, so we'll simply store them in memory.

// It's all for the sake of simplicity and demonstration.

export const runningScripts: Record<string, RunningScript>= {}

// Now, we define the Nuxt handler. readBody and setResponseStatus are imported implicitly by Nuxt.

export default defineEventHandler(async (event) => {

const request = await readBody(event) as Request

// We need both prompt and pages to be defined in the body

if (!request.prompt) {

throw createError({

statusCode: 400,

statusMessage: 'prompt is required'

});

}

if (!request.pages) {

throw createError({

statusCode: 400,

statusMessage: 'pages are required'

});

}

// We call the GPTScript node module to run our (yet to be written) story-book.gpt file.

const {stdout, stderr, promise} = await gptscript.streamExecFile(

'story-book.gpt',

`--story ${request.prompt} --pages ${request.pages}`,

{})

// Since we're not done processing, we respond with a 202 to inform

// the client that we've received the request and are processing it.

setResponseStatus(event, 202)

// We add the running script to our in-memory store.

runningScripts[request.prompt] = {

stdout: stdout,

stderr: stderr,

promise: promise

}

})

Here’s a breakdown of what’s happening in the code:

- We define certain types to hold the request’s body and the response from the GPTScript module.

- A Nuxt handler for this route is established.

- The body is read as a Request type.

- We ensure the user has provided a prompt and has specified the number of pages for the story.

- The GPTScript’s node module is utilized to execute the script.

- Finally, we keep the running script in our in-memory store.
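The validation step can also be pulled out into a small pure function, which is easier to test than the handler itself. The sketch below is an assumption-laden variant rather than the code used in the route: `validateStoryRequest` and its error messages are hypothetical, and it is slightly stricter than the handler because it also rejects non-numeric or fractional page counts.

```typescript
type StoryRequest = { prompt: string; pages: number };

// Hypothetical helper: returns an error message, or null when the body is valid.
function validateStoryRequest(body: Partial<StoryRequest>): string | null {
  if (typeof body.prompt !== 'string' || body.prompt.trim() === '') {
    return 'prompt is required';
  }
  if (
    typeof body.pages !== 'number' ||
    !Number.isInteger(body.pages) ||
    body.pages < 1
  ) {
    return 'pages must be a positive whole number';
  }
  return null;
}

const okResult = validateStoryRequest({ prompt: 'A cat who learns to sail', pages: 3 });
const missingPrompt = validateStoryRequest({ pages: 3 });
const zeroPages = validateStoryRequest({ prompt: 'A cat who learns to sail', pages: 0 });
```

Inside the handler, a non-null result could be passed as the statusMessage of the 400 error that createError builds.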

Implementing the SSE route

After successfully creating a route to initiate a GPTScript, the next step is to develop a pathway for tracking its progress. This is where Server-Sent Events (SSE) become useful. SSE allows us to stream updates from the GPTScript to the front-end in real time. To ensure this works properly, we have to set specific headers and write updates to the newly opened stream as they arrive from the GPTScript. Now, let’s explore the code to see how we can accomplish this.

// Import the in-memory store for running scripts

import { runningScripts } from '@/server/api/story/index.post';

// Define the Nuxt handler. getQuery, setResponseStatus, and setHeaders are

// imported implicitly by Nuxt.

export default defineEventHandler(async (event) => {

// The prompt is the key to retrieve the value from the runningScripts

// store.

const { prompt } = getQuery(event);

if (!prompt) {

throw createError({

statusCode: 400,

statusMessage: 'prompt is required'

});

}

// Retrieve the running script from the store

const runningScript = runningScripts[prompt as string];

if (!runningScript) {

throw createError({

statusCode: 404,

statusMessage: 'running script not found'

});

}

// Since we found the script, we can go ahead and set the status to

// 200. Along with this we need to set specific headers that indicate

// that the connection should be kept alive and is an event stream.

setResponseStatus(event, 200);

setHeaders(event, {

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Credentials': 'true',

'Connection': 'keep-alive',

'Content-Type': 'text/event-stream',

});

// Set up an event handler that buffers new data arriving on stdout.

let stdoutBuffer = '';

runningScript.stdout.on('data', (data) => {

stdoutBuffer += data;

// This ensures that we only write responses when they're terminated

// with a newline.

if (data.includes('\n')) {

// Write the complete line to the event stream.

event.node.res.write(`data: ${stdoutBuffer}\n\n`);

stdoutBuffer = '';

}

});

// Set up an event handler that buffers new data arriving on stderr.

let stderrBuffer = '';

runningScript.stderr.on('data', (data) => {

stderrBuffer += data;

// This ensures that we only write responses when they're terminated

// with a newline.

if (data.includes('\n')) {

// Write the complete line to the event stream.

event.node.res.write(`data: ${stderrBuffer}\n\n`);

stderrBuffer = '';

}

});

// This ensures that Nuxt won't automatically handle the request.

event._handled = true;

// Wait for the promise to settle and, whether it succeeds or fails,

// terminate the stream with a final message.

await runningScript.promise.then(() => {

event.node.res.write('data: done\n\n');

event.node.res.end();

}).catch((error) => {

setResponseStatus(event, 500);

event.node.res.write(`data: error: ${error}\n\n`);

event.node.res.end();

});

});

Alright, let’s unpack this a bit more!

- First, we import the in-memory store for running scripts. This is crucial as it helps us retrieve updates for any running script.

- Next, we define the Nuxt handler. This is where the magic happens!

- We then extract the prompt from the query parameters. Of course, if you’re dealing with larger values, you might want to consider replacing this with a body. However, for the purpose of this demonstration, we’ve chosen to use a query parameter.

- Afterward, we verify that the prompt has a corresponding key in the runningScripts store. If it doesn’t, we need to return an error to inform the user.

- Once we’ve confirmed the existence of the script, we set the response code and the necessary headers to implement Server-Sent Events (SSE). This is the part that allows us to stream updates from the GPTScript right to the front-end in real-time!

- Now comes the interesting part. We write out event handlers for both stdout and stderr. The responses are buffered, ensuring that only complete lines are sent to the front-end. These events are then written to the event stream.

- We then instruct Nuxt not to automatically resolve the request. This gives us more control over the process and allows us to handle any exceptions or errors that might occur.

- Lastly, once the promise resolves, we determine how to terminate and respond based on whether the operation was successful or not.
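The buffering step is the easiest part to get subtly wrong, so here is a minimal, framework-free sketch of it. It is a variant of the approach in the route rather than a copy: the hypothetical `createSseFramer` helper splits the buffer on newlines, emits one SSE frame per complete line, and keeps any trailing partial line until the rest of it arrives.

```typescript
// Hypothetical helper: buffers raw chunks and returns one SSE frame per
// complete line. Nothing here depends on Nuxt or GPTScript.
function createSseFramer() {
  let buffer = '';
  return (chunk: string): string[] => {
    buffer += chunk;
    const lines = buffer.split('\n');
    // The last element is whatever follows the final newline (possibly ''),
    // so it stays in the buffer until the rest of that line arrives.
    buffer = lines.pop() ?? '';
    // An SSE message is a "data: ..." line terminated by a blank line.
    return lines.map((line) => `data: ${line}\n\n`);
  };
}

const frame = createSseFramer();
const first = frame('Writing pa');
const second = frame('ge 1\nIllus');
const third = frame('trating\n');
```

In the route, each returned frame would be passed to event.node.res.write. Sending one line per frame may also be safer than writing the whole buffer at once, since a payload line that lacks the data: prefix is not treated as message data by an EventSource client.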

That’s it! With these steps, you’ve successfully set up the API and can begin work on writing the GPTScript to perform the generation.

Writing the GPTScript

Next, we will write a GPTScript for our API to execute.

tools: story-writer, story-illustrator, mkdir, sys.write, sys.read, sys.download

description: Writes a children's book and generates illustrations for it.

args: story: The story to write and illustrate. Can be a prompt or a complete story.

args: pages: The number of pages to generate

You are a story-writer. Do the following steps sequentially.

1. Come up with an appropriate title for the story based on the ${story}

2. Create the `public/stories/${story-title}` directory if it does not already exist.

3. If ${story} is a prompt and not a complete children's story, call story-writer

to write a story based on the prompt.

4. Take ${story} and break it up into ${pages} logical "pages" of text.

5. For each page:

- For the content of the page, write it to `public/stories/${story-title}/page<page_number>.txt` and add appropriate newline

characters.

- Call story-illustrator to illustrate it. Be sure to include any relevant characters to include when

asking it to illustrate the page.

- Download the illustration to a file at `public/stories/${story-title}/page<page_number>.png`.

---

name: story-writer

description: Writes a story for children

args: prompt: The prompt to use for the story

temperature: 1

Write a story with a tone for children based on ${prompt}.

---

name: story-illustrator

tools: github.com/gptscript-ai/image-generation

description: Generates an illustration for a children's story

args: text: The text of the page to illustrate

Think of a good prompt to generate an image to represent ${text}. Make sure to

include the name of any relevant characters in your prompt. Then use that prompt to

generate an illustration. Append any prompt that you have with ". In a pointillism cartoon

children's book style with no text in it". Only return the URL of the illustration.

---

name: mkdir

tools: sys.write

description: Creates a specified directory

args: dir: Path to the directory to be created. Will create parent directories.

#!bash

mkdir -p "${dir}"

This document contains a lot of prompt engineering and GPTScript specific terminology. Let’s clarify each term:

- tools – This tells GPTScript what tools are available when executing the script. In this case, we’re providing access to several tools we’ve created: story-writer, story-illustrator, and mkdir, as well as some built-in system tools: sys.write, sys.read, sys.download.

- description – This provides the Language Model (LLM) with information about the tool’s function and usage.

- args – These are the values that the user supplies when running the script. Recall from our API that we pass story and pages here.

- Everything else until the next --- (which marks the start of a new tool) comprises the script’s prompt. In this case, we’re giving the LLM details about its role and the process of generating a story. When run, the LLM will choose what tools to employ and how to use them.

- --- – This marks the beginning of a new in-file tool. In this context, we’ve defined three tools (story-writer, story-illustrator, and mkdir).

- #!bash – This tells GPTScript to run the tool’s body as a bash script instead of sending it to the LLM as a prompt.
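To see how these pieces fit together in one file, the sketch below splits a script into its tool sections and separates each section's header lines from its body. It is purely illustrative: `splitTools` and `ToolSection` are hypothetical names, the header keys are only the ones used in this post, and this is not how GPTScript itself parses files.

```typescript
// Hypothetical illustration only: this is not GPTScript's real parser.
type ToolSection = { name: string; tools: string[]; body: string };

function splitTools(source: string): ToolSection[] {
  // A line containing only "---" starts a new in-file tool.
  return source.split(/^---$/m).map((section) => {
    const tool: ToolSection = { name: '(entry point)', tools: [], body: '' };
    const body: string[] = [];
    let inBody = false;

    for (const line of section.trim().split('\n')) {
      const match = /^(name|tools|description|args|temperature):\s*(.*)$/.exec(line);
      if (!inBody && match) {
        // Header lines come first and use a "key: value" form.
        if (match[1] === 'name') tool.name = match[2];
        if (match[1] === 'tools') tool.tools = match[2].split(',').map((t) => t.trim());
      } else {
        // Everything after the header is the prompt (or the #!bash script).
        inBody = true;
        body.push(line);
      }
    }

    tool.body = body.join('\n').trim();
    return tool;
  });
}

const sample = [
  'tools: story-writer, mkdir',
  'description: Writes a story.',
  '',
  'You are a story-writer.',
  '---',
  'name: mkdir',
  'description: Creates a specified directory',
  'args: dir: Path to the directory to be created.',
  '',
  '#!bash',
  'mkdir -p "${dir}"',
].join('\n');

const sections = splitTools(sample);
```

The first section has no name because it is the entry point of the script; every later section is a tool that the entry point, or another tool, can list under tools.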

With our GPTScript finished, we’re ready to start building out the front-end!

Streaming Events to the Front-end

With our API ready, we can start building the front-end.

Understanding our UI stack (optional)

In the following sections, we will cover the user interface (UI) aspect of this web application. For further understanding, you can explore the three main technologies involved through the links below. However, we will only provide a brief overview of each for demonstration purposes.

- Vue – Nuxt uses this framework for component-based rendering. Read more about it here if you’re interested.

- TailwindCSS – This CSS library allows for styling through predefined classes it provides. The majority of the styling in this tutorial is done through TailwindCSS.

- @nuxt/ui – This plug-and-play component library for Nuxt provides many of the core components used in this tutorial. It’s a great tool for quickly designing impressive UIs. Any component in this tutorial that starts with a U (like UCard or UButton) is from this library.

Please note that we will not be writing the code to display your rendered stories on the front-end. The implementation of this is relatively straightforward. Since the purpose of this tutorial is to learn how to interact with GPTScript at an API level, you can learn more about displaying rendered stories by visiting the story-book example in the GPTScript repo.

Getting our app.vue in order

First, let’s start by setting up our app.vue to render pages and be styled appropriately.

<!-- app.vue -->

<script lang="ts" setup>

// Sets up site header data.

useHead({

title: 'Story Book',

meta: [

{ charset: 'utf-8' },

{ name: 'apple-mobile-web-app-capable', content: 'yes' },

{ name: 'format-detection', content: 'telephone=no' },

{ name: 'viewport', content: `width=device-width` },

],

})

</script>

<template>

<div>

<div class="grid grid-rows-[1fr] fixed top-0 right-0 bottom-0 left-0">

<div class="overflow-auto border-t-2 border-transparent">

<div class="p-5 lg:p-10 max-w-full w-full h-full mx-auto">

<!-- Tells Nuxt to render the pages directory -->

<NuxtPage class="pb-10" />

</div>

</div>

</div>

</div>

</template>

The crucial part of this code is <NuxtPage/>. Adding this component tells Nuxt to render .vue files in the pages directory, using the same file-based routing format as the server directory.

Writing the index.vue

Next, let’s update our index.vue to include the necessary code for creating a story and rendering updates from GPTScript on the front-end.

<script setup lang="ts">

// Define the variables we will need for the script

const MAX_MESSAGES = 20;

const pendingStory = ref<EventSource>();

const pendingStoryMessages = ref<Array<string>>([]);

const pageOptions = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

const state = reactive({

prompt: '',

pages: 0,

})

// addMessage ensures that the number of messages in the pendingStoryMessages

// never exceeds MAX_MESSAGES

const addMessage = (message: string) => {

pendingStoryMessages.value.push(message)

if (pendingStoryMessages.value.length > MAX_MESSAGES) {

pendingStoryMessages.value.shift();

}

}

// Create a function for the form's submit button to trigger

async function onSubmit () {

// Using fetch, submit a request to generate a new story

// to the backend by calling JSON.stringify on the form's state.

const response = await fetch('/api/story', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify(state)

})

// When a new request is sent, clear the buffer.

pendingStoryMessages.value = []

if (response.ok) {

// On success, create a new EventSource for the specified prompt.

// When a message is received, push it into the stream. Look for the

// termination character we specified earlier with "done" or "error".

const es = new EventSource(`/api/story/sse?prompt=${encodeURIComponent(state.prompt)}`)

es.onmessage = async (event) => {

addMessage(event.data)

if ((event.data as string) === 'done') {

es.close()

pendingStory.value = undefined

} else if ((event.data as string).includes('error')) {

es.close()

pendingStory.value = undefined

addMessage(`An error occurred while generating the story: ${event.data}`)

}

}

pendingStory.value = es

} else {

// If an error occurred, add it to the message buffer.

addMessage(`An error occurred while generating the story: ${response.statusText}`)

}

}

</script>

<template>

<div class="flex flex-col lg:flex-row space-y-10 lg:space-x-10 lg:space-y-0 h-full">

<UCard class="h-full w-full lg:w-1/2">

<template #header>

<h1 class="text-2xl">Create a new story</h1>

</template>

<UForm class="h-full" :state="state" @submit="onSubmit">

<UFormGroup size="lg" class="mb-6" label="Pages" name="pages">

<USelectMenu class="w-1/4 md:w-1/6" v-model="state.pages" :options="pageOptions"/>

</UFormGroup>

<UFormGroup class="my-6" label="Story" name="prompt">

<UTextarea :ui="{base: 'h-[50vh]'}" size="xl" class="" v-model="state.prompt" label="Prompt" placeholder="Put your full story here or prompt for a new one"/>

</UFormGroup>

<UButton :disabled="!!pendingStory" size="xl" icon="i-heroicons-book-open" type="submit">Create Story</UButton>

</UForm>

</UCard>

<UCard class="h-full w-full lg:w-1/2">

<template #header>

<h1 class="text-2xl">GPTScript Story Generation Progress</h1>

</template>

<div class="h-full">

<h2 v-if="pendingStory" class="text-zinc-400 mb-4">GPTScript is currently building the story you requested. You can see its progress below.</h2>

<h2 v-else-if="!pendingStory && pendingStoryMessages.length" class="text-zinc-400 mb-4">Your story finished! Submit a new prompt to generate another.</h2>

<h2 v-else class="text-zinc-400 mb-4">You currently have no stories processing. Submit a prompt for a new story to get started!</h2>

<div class="h-full">

<pre class="h-full bg-zinc-950 px-6 text-white overflow-x-scroll rounded shadow">

<p v-for="message in pendingStoryMessages">> {{ message }}</p>

</pre>

</div>

</div>

</UCard>

</div>

</template>

- First, we initialize some necessary variables.

- MAX_MESSAGES – This variable sets a limit for the maximum number of messages that can be displayed on the front-end.

- pendingStory – This is a reactive reference to an EventSource that represents the current story being generated.

- pendingStoryMessages – This is a reactive reference to an array that will hold the messages streamed from the server.

- state – This is a reactive state object that holds the user’s input for the story prompt and the number of pages.

- Next, we create a function called addMessage that adds a new message to the pendingStoryMessages array and ensures that the array’s length never exceeds MAX_MESSAGES.

- Then we create onSubmit which is triggered when the form is submitted. This function sends a POST request to the server to create a new story. It also handles the server’s response, initiates the EventSource for tracking the story’s progress, and handles any errors that might occur during the process.

- Finally, we write out the Vue template that will actually render all of the processed data from the previous steps. This includes a form for user input and a display area for the messages streamed from the server.
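The two pieces of logic inside the script block, capping the message list and deciding when the stream is finished, can be sketched without Vue at all. The helpers below are hypothetical plain-TypeScript equivalents (`pushBounded` and `classifyMessage` are not names from the component), written so the behaviour can be checked in isolation.

```typescript
// Keep only the newest `max` messages (a plain-array take on addMessage).
function pushBounded(messages: string[], message: string, max: number): string[] {
  const next = [...messages, message];
  return next.length > max ? next.slice(next.length - max) : next;
}

type StreamState = 'running' | 'done' | 'failed';

// Mirrors the checks in onmessage: "done" ends the stream and any message
// that mentions "error" is treated as a failure.
function classifyMessage(data: string): StreamState {
  if (data === 'done') return 'done';
  if (data.includes('error')) return 'failed';
  return 'running';
}

let log: string[] = [];
for (let i = 1; i <= 5; i++) {
  log = pushBounded(log, `message ${i}`, 3);
}
const states = ['Writing page 1', 'error: boom', 'done'].map(classifyMessage);
```

One design caveat: because the failure check is a substring match, a line of story text that happens to contain the word "error" would also close the stream, so a stricter marker may be worth considering.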

Testing it out

After preparing everything, we can begin testing. First, set the OPENAI_API_KEY and then start the local Nuxt development server.

export OPENAI_API_KEY=<your key here>

npm run dev

Pro tip: If you’d rather not type your OPENAI_API_KEY into the terminal, you can keep it in a .env file and source it.

echo "OPENAI_API_KEY=" > .env

open .env # add your key in this file

set -a && source .env && set +a # export the variables so child processes can read them
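If the key is missing, the failure tends to surface late, in the middle of a script run. A small guard can report the problem at startup instead. This is a minimal sketch: `requireEnv` is a hypothetical helper, not something provided by Nuxt or the gptscript module, and it takes the environment as a parameter so it can be exercised without touching the real one.

```typescript
// Hypothetical helper: fail fast with a clear message when a variable is missing.
function requireEnv(
  name: string,
  env: Record<string, string | undefined>,
): string {
  const value = env[name];
  if (value === undefined || value.trim() === '') {
    throw new Error(`${name} is not set; export it before running "npm run dev"`);
  }
  return value;
}

const key = requireEnv('OPENAI_API_KEY', { OPENAI_API_KEY: 'sk-example' });
```

In server code the second argument would be process.env, for example requireEnv('OPENAI_API_KEY', process.env) at the top of the POST handler.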

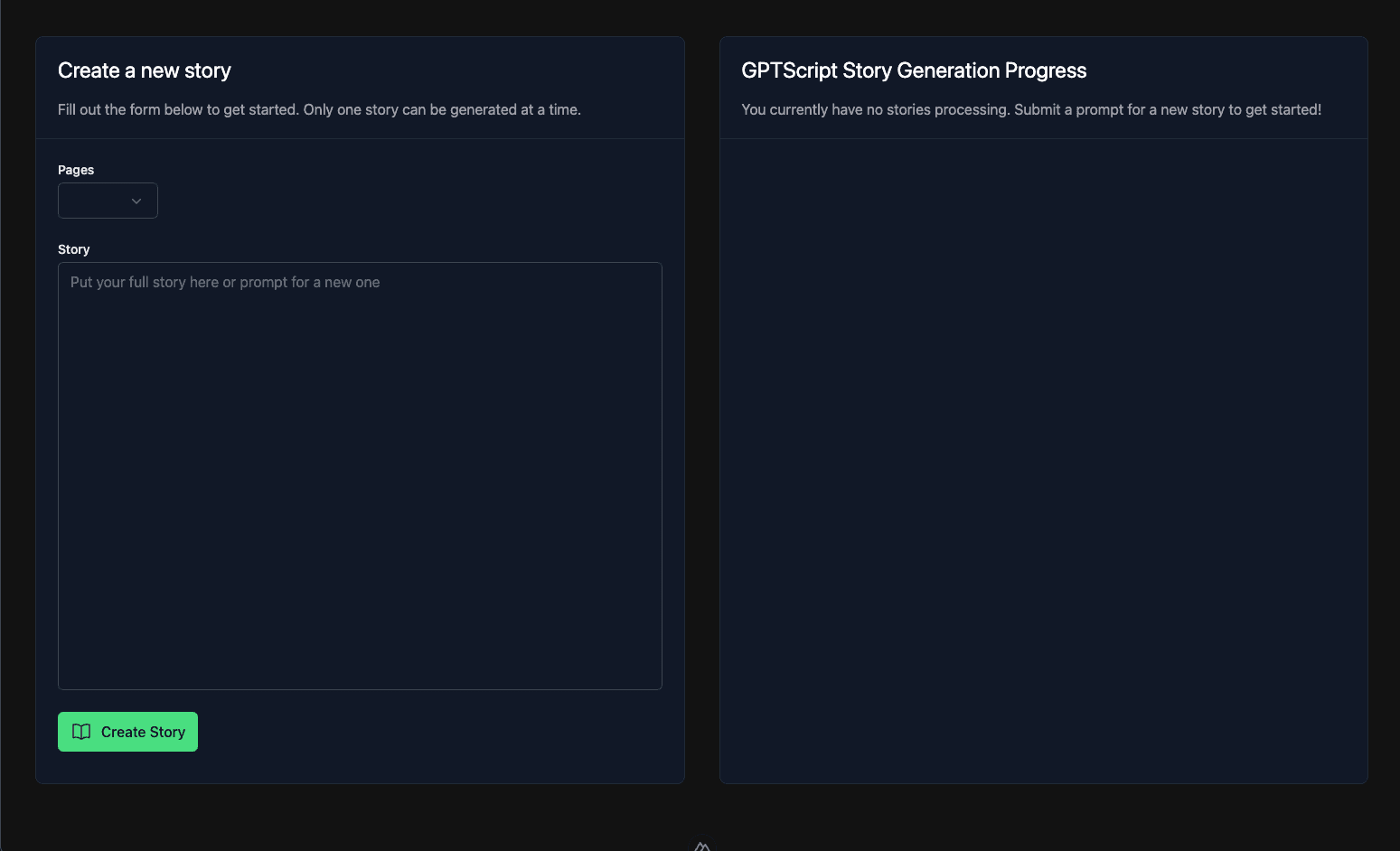

The application will start at localhost:3000. Opening this address in your browser will display the following interface.

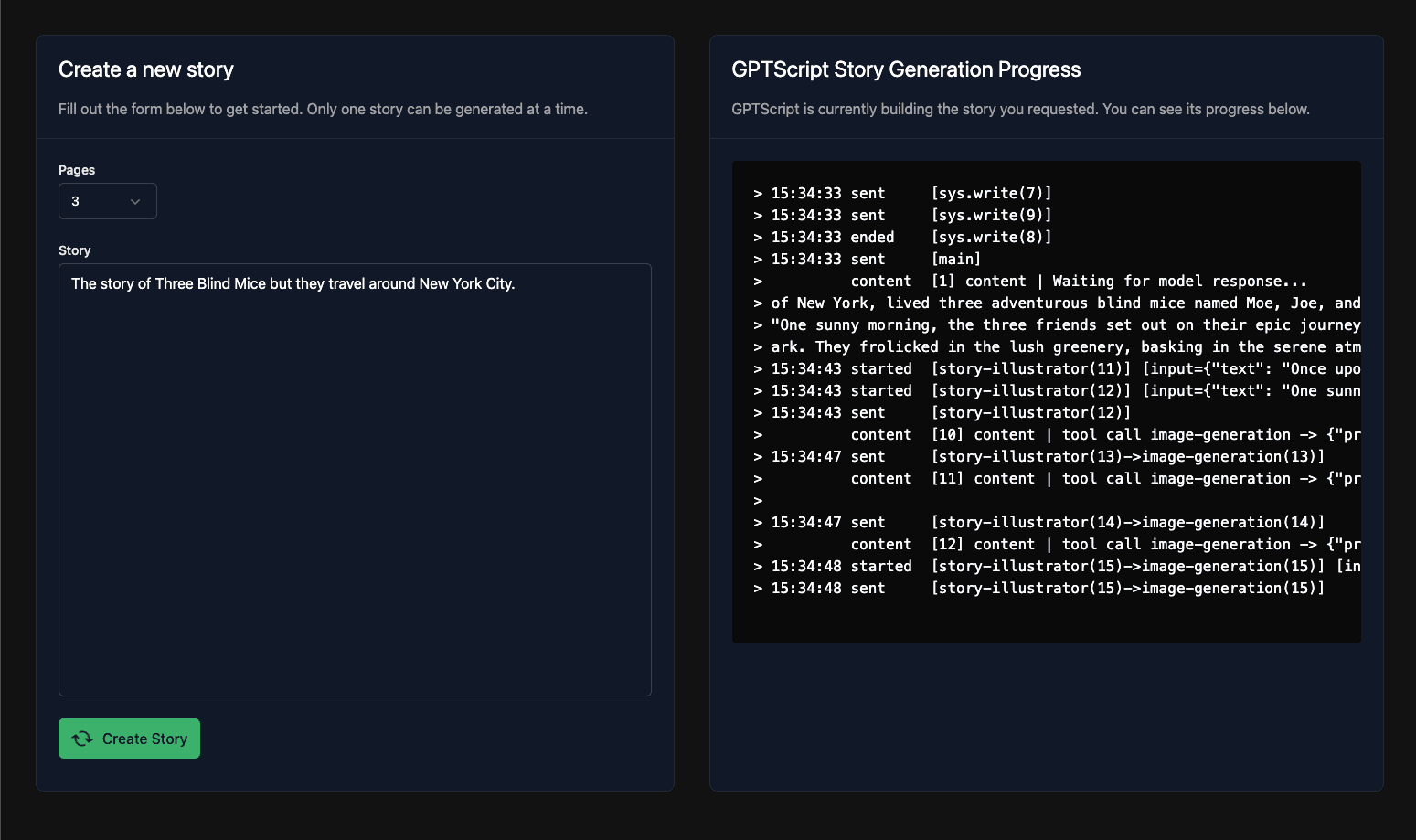

When we enter a story and submit, we can see the updates stream on the right-hand side.

Once completed, the UI will indicate that the process is finished. You can then view the generated files in the public/stories directory where the LLM has written everything.

With that, we’re done with this part.

Wrapping up

Congratulations! If you’ve made it this far, you now have an understanding of how to integrate and use GPTScript effectively within your own set of APIs. This guide has delved into the use of the GPTScript node module, a powerful tool for programmatically streaming updates to a front-end. Next time we’ll be diving into tidying up our code, rendering the story, and making this a fully deployable web application.

If you’re interested in further expanding your knowledge, connecting with others using GPTScript, or learning about the development of GPTScript, we strongly recommend visiting our GitHub repository.

If you’d like to chat about building your own projects using GPTScript, schedule a conversation.