Welcome to the third part of the tutorial series: Harnessing AI to Become a DevOps Pro! In this series, we help you master DevOps fundamentals with our AI assistant Clio as your instructor.

We will be exploring 8 key areas of DevOps:

- Version Control Systems (VCS)

- Continuous Integration/Continuous Deployment (CI/CD)

- Containerization

- Orchestration

- Infrastructure as Code (IaC)

- Cloud

- Monitoring and Logging

- Security

All you need to get started is some basic programming knowledge. To start from the beginning, check out the intro guide here.

Follow along each week as we release detailed guides to each of the topics above.

In the third part of this DevOps with AI series, I’m going to learn about containerization. According to my teacher, Clio, it is all about packaging applications and their dependencies into containers. This is done to ensure consistency between your development and production environments. The tool we’re going to focus on in this part is Docker.

What is Clio? Learn more about Clio, our AI copilot that runs in your CLI, here.

What is Docker?

I think that the most obvious place to begin is to find out more about what Docker actually is, and how it works. I will ask my favorite teacher this question 🙂

>>> Can you briefly explain to me what Docker is and how it works?

Certainly! Docker is an open-source platform that automates the deployment, scaling, and management of applications using containerization. It allows developers to package applications and their dependencies into a standardized unit called a container. Here's a brief overview of how Docker works:

### Key Concepts:

1. Containers: Containers are lightweight, portable, and self-sufficient units that include everything needed to run a piece of software, including the code, runtime, libraries, and system tools. They run consistently across different environments, from development to production.

2. Docker Images: A Docker image is a read-only template used to create containers. It includes the application code, libraries, and dependencies. Images are built from a Dockerfile, which is a script containing a series of instructions on how to build the image.

3. Docker Engine: This is the core part of Docker, responsible for running and managing containers. It includes a server (a long-running daemon process), a REST API for interacting with the daemon, and a command-line interface (CLI) client.

4. Dockerfile: A text file containing a series of commands and instructions to assemble a Docker image. It specifies the base image, application code, environment variables, and other configurations.

5. Docker Hub: A cloud-based registry service where Docker users can share and distribute container images. It acts as a repository for Docker images.

### How Docker Works:

1. Build: Developers write a Dockerfile and use the docker build command to create a Docker image. This image contains the application and its dependencies.

2. Ship: The Docker image is pushed to a registry like Docker Hub, making it available for others to download and use.

3. Run: Using the docker run command, the Docker image is instantiated as a container on any system with Docker installed. The container runs the application in an isolated environment.

4. Manage: Docker provides tools to manage the lifecycle of containers, including starting, stopping, and scaling them as needed.

Docker simplifies the development and deployment process by ensuring that applications run the same way in different environments, reducing the "it works on my machine" problem. It's widely used in DevOps for continuous integration and continuous deployment (CI/CD) pipelines, microservices architecture, and more.

If you have any specific questions or want to try out some Docker commands, feel free to ask!

That’s a nice and concise answer. I have used Docker a little bit in the past, so I can confirm that what she tells me here is correct. I like that she picks out the key concepts and gives each one a short, easy-to-understand explanation.
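
To make the build, ship, run, and manage steps from the answer a bit more concrete, here is a minimal Python sketch that only assembles the corresponding Docker CLI commands as argument lists. The helper names and the image name "example-user/hello-app" are made up for illustration; nothing is executed unless you pass a list to subprocess.run yourself on a machine with Docker installed.

```python
import shlex


def build_cmd(image: str, context: str = ".") -> list[str]:
    """docker build: create an image from the Dockerfile in `context`."""
    return ["docker", "build", "-t", image, context]


def push_cmd(image: str) -> list[str]:
    """docker push: upload the image to a registry such as Docker Hub."""
    return ["docker", "push", image]


def run_cmd(image: str, host_port: int, container_port: int) -> list[str]:
    """docker run: start a container and publish one port to the host."""
    return ["docker", "run", "-p", f"{host_port}:{container_port}", image]


def stop_cmd(container: str) -> list[str]:
    """docker stop: stop a running container by name or ID."""
    return ["docker", "stop", container]


# "example-user/hello-app" is a hypothetical image name.
image = "example-user/hello-app"
for cmd in (build_cmd(image), push_cmd(image), run_cmd(image, 5000, 5000)):
    # Print only; use subprocess.run(cmd, check=True) to really run a command.
    print(shlex.join(cmd))
```

Keeping each command as a list rather than one long string avoids shell quoting problems if you later decide to execute them from Python.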

I think I’m ready for the next step here.

Before we continue, if you’d like to follow along in an on-demand format, check out this accompanying video I created for Part 3: Containerization.

<iframe width="560" height="315" src="https://www.youtube.com/embed/PsMMLAZCQW8?si=UoH3ULSxmygM-7uX" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" referrerpolicy="strict-origin-when-cross-origin" allowfullscreen></iframe>

My first container

As I said, I have used Docker before, so it’s not actually my first image. But I want her to treat me like it’s the first time. Let’s try to make her come up with a hands-on task so that we can learn more and test it out for ourselves.

>>> Could you come up with a task so that we can get some hands on experience while learning more about Docker? Preferably something with Python.

Absolutely! Let's create a simple Python web application using Flask, package it into a Docker container, and run it. This will give you hands-on experience with Docker, including writing a Dockerfile, building an image, and running a container.

### Task: Dockerize a Simple Flask Application

#### Step 1: Set Up a Simple Flask Application

1. Create a new directory for your project, e.g., flask-docker-app.

2. Inside this directory, create a file named app.py with the following content:

from flask import Flask

app = Flask(__name__)

@app.route('/')
def hello_world():
    return 'Hello, Docker!'

if __name__ == '__main__':
    app.run(host='0.0.0.0', port=5000)

3. Create a requirements.txt file to specify the dependencies:

Flask==3.0.0

Once the image is built, run the container using the following command:

docker run -p 5000:5000 flask-docker-app

• This command maps port 5000 on your host to port 5000 in the container, allowing you to access the Flask app.

#### Step 5: Test the Application

• Open a web browser and go to http://localhost:5000 . You should see the message "Hello, Docker!".

This task will help you understand the basics of Dockerizing a Python application. Let me know if you need any help with these steps or if you want to explore more advanced Docker features!

Okay, I have now created the folder and the app.py with the Flask app inside. I think something is missing from the answer, because steps 2-4 are not there and she’s asking me to run the container after the image is built. But there is no build command or Dockerfile. I have to ask what’s up here 🙂

>>> It looks like some steps of the answer is missing here. Can you show me step 2-4 again?

Certainly! Let's go through Steps 2 to 4 again for Dockerizing the Flask application.

### Step 2: Write a Dockerfile

1. In the same directory as your app.py and requirements.txt, create a file named Dockerfile with the following content:

# Use the official Python image from the Docker Hub
FROM python:3.9-slim

# Set the working directory in the container
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install the dependencies
RUN pip install --no-cache-dir -r requirements.txt

# Make port 5000 available to the world outside this container
EXPOSE 5000

# Define the command to run the application
CMD ["python", "app.py"]

This Dockerfile specifies the base image, sets the working directory, copies the application code, installs dependencies, exposes a port, and defines the command to run the app.

### Step 3: Build the Docker Image

• Open a terminal, navigate to your project directory, and run the following command to build the Docker image:

docker build -t flask-docker-app .

This command builds the Docker image and tags it as flask-docker-app. The . at the end specifies the current directory as the build context.

### Step 4: Run the Docker Container

• Once the image is built, run the container using the following command:

docker run -p 5000:5000 flask-docker-app

This command starts a container from the flask-docker-app image and maps port 5000 on your host to port 5000 in the container, allowing you to access the Flask app from your browser.

### Step 5: Test the Application

• Open a web browser and go to http://localhost:5000 . You should see the message "Hello, Docker!".

These steps will help you build and run a Docker container for a simple Flask application. Let me know if you have any questions or need further assistance!

Okay, that was much better. I saw these steps while she was printing them out earlier, but somehow the answer just collapsed.

I now understand why some of the things mentioned in the introduction post were so important, for example the parts about basic command line skills and programming skills. Since I have both of these, following everything she says here is easy for me. I’m not just following along, I actually understand it.
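
To check my understanding of the -p 5000:5000 flag from Step 4, here is a small Python sketch that splits a port mapping into its host and container parts. It only handles the simple HOST_PORT:CONTAINER_PORT form used in this post (the real flag accepts more variants), and the names PortMapping, parse_port_mapping, and local_url are my own, not part of Docker.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_port: int       # port you open in the browser on your machine
    container_port: int  # port the app listens on inside the container


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse the simple HOST_PORT:CONTAINER_PORT form used by `docker run -p`."""
    host, sep, container = spec.partition(":")
    if not sep or not host.isdigit() or not container.isdigit():
        raise ValueError(f"expected HOST_PORT:CONTAINER_PORT, got {spec!r}")
    return PortMapping(int(host), int(container))


def local_url(spec: str) -> str:
    """URL to open on the host for a container published with `spec`."""
    return f"http://localhost:{parse_port_mapping(spec).host_port}"


print(local_url("5000:5000"))  # http://localhost:5000
print(local_url("8080:5000"))  # http://localhost:8080
```

The second call shows the point of the mapping: the Flask app keeps listening on 5000 inside the container, while the host side can be any free port.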

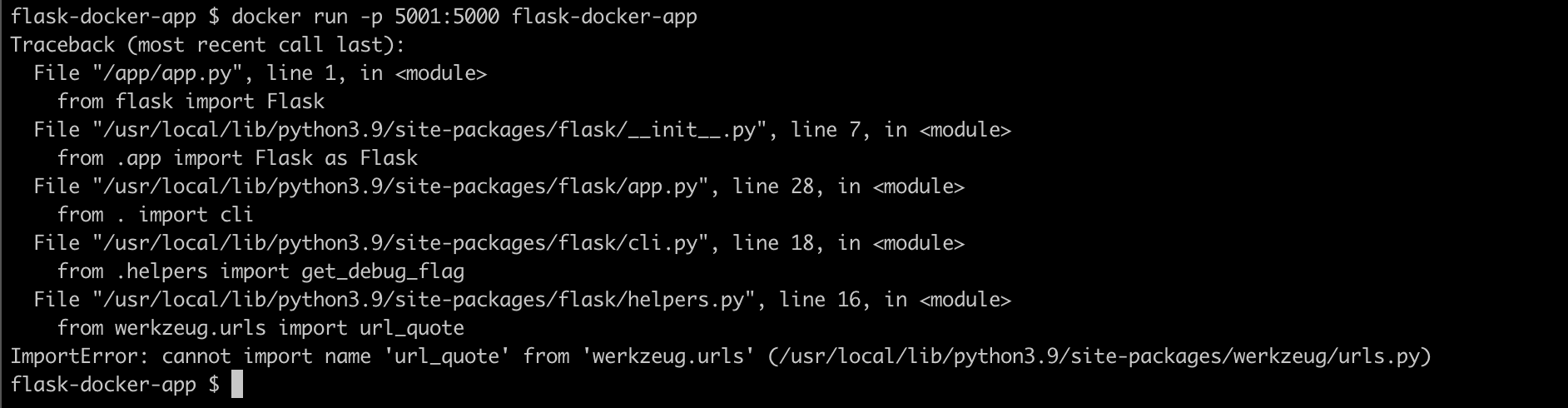

The Dockerfile is ready and I have built the image. The next step is to run the app, but I suspect that it will crash, because I know there’s something wrong with the Flask app. But let’s try it anyway.

Let me ask my teacher about this. I know that "werkzeug" is a dependency of Flask, so we need to install it. To make it easy, I just copy and paste the error directly into Clio:

ImportError: cannot import name 'url_quote' from 'werkzeug.urls' (/usr/local/lib/python3.9/site-packages/werkzeug/urls.py)

The error you're encountering is due to a change in the Werkzeug library, which is a dependency of Flask. The url_quote function has been removed or relocated in recent versions of Werkzeug. Here's how you can resolve this issue:

### Solution 1: Update Your Code

If you're maintaining the code, you should update it to be compatible with the latest version of Werkzeug. The url_quote function has been replaced by quote from the urllib.parse module in Python's standard library. Here's how you can modify your code:

1. Replace the Import: Instead of importing url_quote from werkzeug.urls, import quote from urllib.parse:

from urllib.parse import quote

2. Use quote Instead of url_quote: Replace any usage of url_quote with quote.

### Solution 2: Pin Werkzeug to an Older Version

If you cannot update the code immediately, you can pin Werkzeug to an older version that still includes url_quote. Add a specific version to your requirements.txt:

1. Modify requirements.txt: Add Werkzeug with a version constraint:

Flask==3.0.0

Werkzeug==3.0.1

2. Rebuild the Docker Image: After updating requirements.txt, rebuild your Docker image to install the specified version of Werkzeug:

docker build -t flask-docker-app .

### Solution 3: Update Flask and Dependencies

Ensure that all your dependencies are up-to-date and compatible with each other. Sometimes, updating Flask and its dependencies can resolve such issues:

1. Update requirements.txt: Ensure you have the latest versions of Flask and Werkzeug:

Flask

Werkzeug

2. Rebuild the Docker Image: Rebuild your Docker image to install the latest versions:

docker build -t flask-docker-app .

Choose the solution that best fits your situation. If you have control over the code, updating it to use urllib.parse.quote is the most future-proof solution. Let me know if you need further assistance!
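
Solution 1 points at quote from urllib.parse. Here is a quick sketch of how that standard-library function behaves on its own, independent of Flask or Werkzeug. The sample strings are made up, and whether quote is a drop-in replacement depends on how the calling code used url_quote, so treat this as an illustration rather than a migration recipe.

```python
from urllib.parse import quote

# Characters other than letters, digits and "_.-~" are percent-encoded.
print(quote("Hello, Docker!"))       # Hello%2C%20Docker%21

# "/" is left alone by default (safe="/"), which suits URL paths.
print(quote("/greet/Hello Docker"))  # /greet/Hello%20Docker

# Pass safe="" to encode "/" as well, e.g. for a single path segment.
print(quote("a/b", safe=""))         # a%2Fb
```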

I’m going to go with solution number 2. I like Werkzeug. She’s asking me to insert "Werkzeug<2". This is a rather old version of the package, so I changed it to "Werkzeug>2.0".

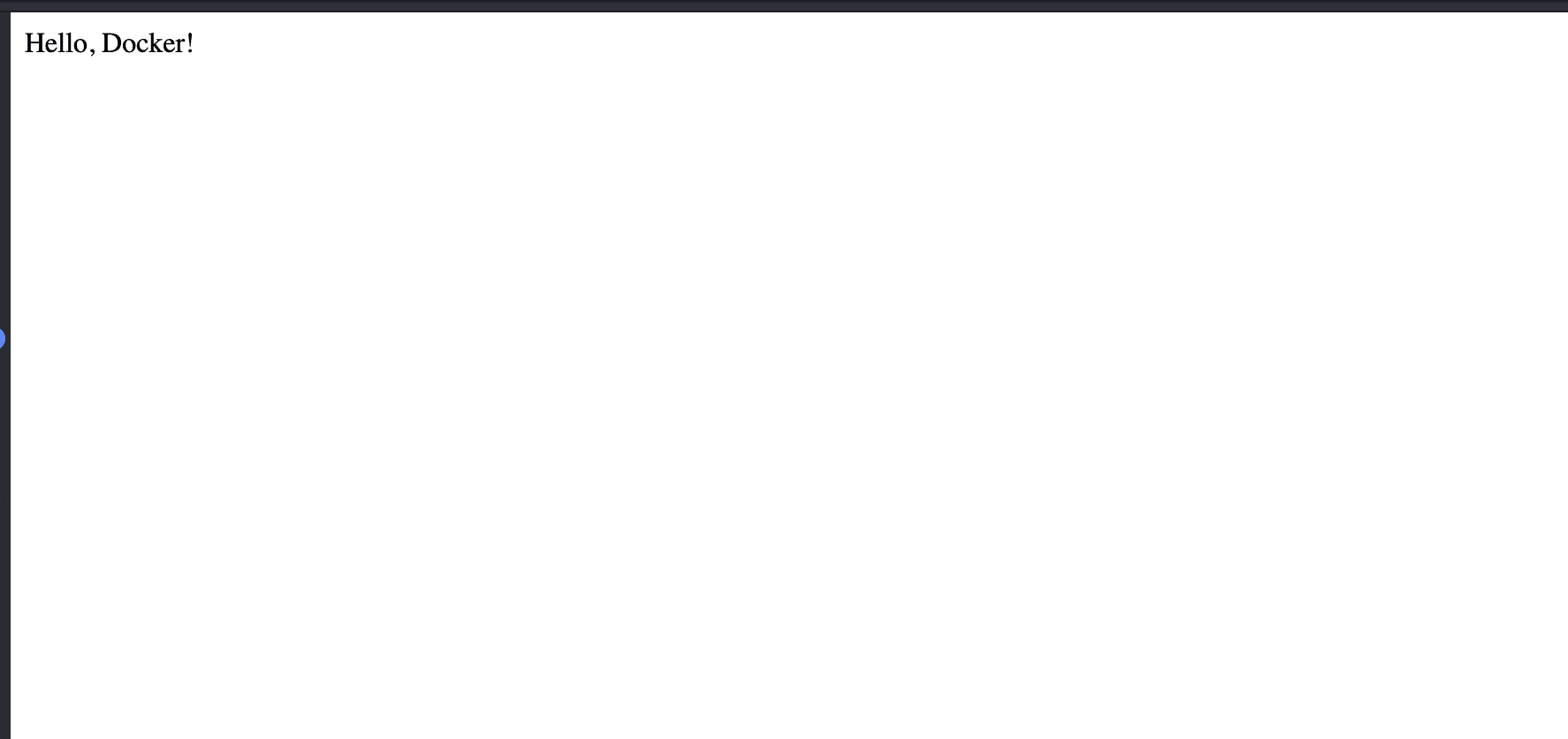

Next, I ran the build command again and then tried running the container. And sure enough, it was working now.

Awesome 😀
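
Instead of opening a browser, the same check can be scripted. The sketch below polls a URL until the app answers, which is handy because a container can take a moment to start. The function names and the retry defaults are my own choices, and the fetch parameter exists only so the polling logic can be tried out without a running container.

```python
import time
import urllib.request
from typing import Callable


def fetch_body(url: str) -> str:
    """Return the response body of a GET request as text."""
    with urllib.request.urlopen(url, timeout=2) as response:
        return response.read().decode("utf-8")


def wait_for_app(
    url: str,
    attempts: int = 10,
    delay: float = 0.5,
    fetch: Callable[[str], str] = fetch_body,
) -> str:
    """Poll `url` until it answers, then return the response body.

    Raises TimeoutError if the app never responds within `attempts` tries.
    """
    for _ in range(attempts):
        try:
            return fetch(url)
        except OSError:
            # Connection refused or timed out: the container may still be starting.
            time.sleep(delay)
    raise TimeoutError(f"{url} did not respond after {attempts} attempts")
```

With the container from this post running with -p 5000:5000, calling wait_for_app("http://localhost:5000") should return the same "Hello, Docker!" text the browser shows.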

Docker Compose

I’m used to working with Docker Compose from earlier projects. Let’s try replicating this process, but using Docker Compose instead.

>>> What about docker compose, where does that come into the picture?

Docker Compose is a tool that simplifies the management of multi-container Docker applications. It allows you to define and run multi-container applications using a YAML file, making it easier to manage complex applications with multiple services, networks, and volumes. Here's how Docker Compose fits into the picture:

### When to Use Docker Compose

• Multi-Container Applications: If your application consists of multiple services (e.g., a web server, database, cache), Docker Compose helps you manage them as a single application.

• Development and Testing: Docker Compose is great for setting up development and testing environments quickly, as it allows you to define the entire application stack in a single file.

• Simplified Commands: Instead of running multiple docker run commands, you can use a single docker-compose up command to start all services defined in the Compose file.

### How Docker Compose Works

1. Define Services in docker-compose.yml: You create a docker-compose.yml file where you define all the services your application needs, including their images, ports, volumes, and networks.

2. Build and Run: Use docker-compose up to build and start all the services defined in the docker-compose.yml file. Docker Compose handles the orchestration of starting and linking containers.

3. Manage the Application: You can use commands like docker-compose down to stop and remove all the containers, networks, and volumes defined in the Compose file.

### Example docker-compose.yml for a Flask Application

Here's a simple example of how you might use Docker Compose for a Flask application with a Redis service:

version: '3.8'

services:
  web:
    build:
      context: .
    ports:
      - "5000:5000"
    volumes:
      - .:/app
    depends_on:
      - redis
  redis:
    image: "redis:alpine"

### Steps to Use Docker Compose

1. Create docker-compose.yml: Define your services in a docker-compose.yml file.

2. Build and Start Services: Run the following command to build and start all services:

docker-compose up

3. Stop Services: To stop the services, use:

docker-compose down

Docker Compose is a powerful tool for managing complex applications and is widely used in development and production environments. If you want to try it out or need help setting up a specific configuration, let me know!

I created the docker-compose.yml and pasted in the content, ran "docker-compose up", and went to the browser. And it still worked 😀
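
One thing the Compose file expresses that a plain docker run does not is the depends_on relationship between web and redis. As a rough illustration of what "start in dependency order" means, here is a Python sketch that derives a start order from the same two services using a topological sort. This is my own model of the idea, not how Compose is implemented, and keep in mind that start order alone does not mean a service is ready to accept connections.

```python
from graphlib import TopologicalSorter

# The services from the Compose file above, reduced to their dependencies.
# Each key maps to the set of services it lists under depends_on.
depends_on = {
    "web": {"redis"},
    "redis": set(),
}


def start_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return service names so that each dependency comes before its dependents."""
    return list(TopologicalSorter(dependencies).static_order())


print(start_order(depends_on))  # ['redis', 'web']
```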

To me, it sounds much easier to just stick to Docker Compose. But I might be wrong. I need to find out what the pros and cons of the two are.

Should I use Docker Compose or what?

>>> What is the difference between using Docker compose and Docker run? What are the pros and cons?

Using docker-compose and docker run are two different approaches to managing Docker containers, each with its own advantages and disadvantages. Here's a comparison to help you understand when to use each:

### Docker Compose

Pros:

1. Multi-Container Management: Docker Compose is designed to manage multi-container applications. It allows you to define all your services, networks, and volumes in a single docker-compose.yml file, making it easier to manage complex applications.

2. Simplified Commands: With Docker Compose, you can start, stop, and manage all your services with a single command (docker-compose up and docker-compose down), which is more convenient than running multiple docker run commands.

3. Environment Configuration: Docker Compose allows you to define environment variables, network configurations, and volume mounts in the docker-compose.yml file, providing a clear and consistent configuration for your application.

4. Dependency Management: The depends_on feature allows you to specify dependencies between services, ensuring that they start in the correct order.

5. Portability: The docker-compose.yml file can be easily shared with others, allowing them to replicate your environment with minimal setup.

Cons:

1. Learning Curve: Docker Compose introduces additional concepts and syntax that may require some learning, especially for beginners.

2. Overhead: For simple, single-container applications, using Docker Compose might be overkill and add unnecessary complexity.

### Docker Run

Pros:

1. Simplicity: For simple, single-container applications, docker run is straightforward and easy to use without the need for additional configuration files.

2. Fine-Grained Control: docker run provides fine-grained control over container options and parameters directly from the command line.

3. Quick Testing: It's useful for quickly testing or running a single container without setting up a docker-compose.yml file.

Cons:

1. Complexity for Multi-Container Apps: Managing multiple containers with docker run can become cumbersome, as you need to manually handle networking, dependencies, and environment configurations.

2. Lack of Configuration File: Without a configuration file, it can be challenging to replicate the exact setup across different environments or share it with others.

3. Manual Dependency Management: You need to manually ensure that containers start in the correct order and are properly networked.

### When to Use Each

• Use Docker Compose when you have a multi-container application or need to manage complex configurations and dependencies. It's ideal for development and testing environments where you need to replicate the entire application stack.

• Use Docker Run for simple, single-container applications or quick tests where you don't need the overhead of managing multiple services.

Both tools are valuable in different scenarios, and understanding their strengths and weaknesses will help you choose the right tool for your needs. Let me know if you have any more questions!

Oh, now I actually understand the difference between them. Earlier, I thought that Docker Compose was sort of a layer on top of Docker to make it easier to use, but it’s actually there to make it easier to "combine" images/containers.

Previously, I have used Docker Compose together with Django and PostgreSQL. For that kind of setup, it sounds like Docker Compose is the way to go. But now I understand how I can use docker run to achieve the same thing.
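
To see what "the same thing with docker run" could look like, here is a Python sketch that turns a trimmed-down version of the two Compose services into the commands you would otherwise type by hand. It only covers image, name, ports, and a shared network, so it is a rough approximation rather than a full equivalent of the Compose file. "flask-docker-app" is the image tag built earlier in this post, while "demo-net" and the helper names are made up for illustration.

```python
import shlex

# "demo-net" is a hypothetical network name; containers on the same
# user-defined network can reach each other by container name.
NETWORK = "demo-net"

# Dependencies are listed first, because dict order is used as start order.
services = {
    "redis": {"image": "redis:alpine", "ports": []},
    "web": {"image": "flask-docker-app", "ports": ["5000:5000"]},
}


def run_args(name: str, service: dict, network: str) -> list[str]:
    """Build a `docker run` command roughly matching one Compose service."""
    args = ["docker", "run", "-d", "--name", name, "--network", network]
    for mapping in service["ports"]:
        args += ["-p", mapping]
    return args + [service["image"]]


def manual_commands(services: dict, network: str) -> list[list[str]]:
    """Commands in the order to run them: create the network, then each container."""
    commands = [["docker", "network", "create", network]]
    commands += [run_args(name, svc, network) for name, svc in services.items()]
    return commands


for command in manual_commands(services, NETWORK):
    print(shlex.join(command))
```

Even for two services this is three commands plus the ordering to remember, which is the bookkeeping the answer above lists under the cons of docker run for multi-container apps.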

Okay, I think that’s enough Docker for now. I have learned a couple of new things, and I’m feeling more confident about using Docker. In the next part of this series, we’ll dive into orchestration. There, we will learn more about containers, with a focus on how to manage and scale them.